Words Fall Apart

Hypergraphia in AI Chatbots and Humans

When Emma Hauck (1878-1920) was institutionalized for schizophrenia in a German psychiatric hospital, the young mother wrote letters to her husband that were never delivered.

Some read “herzensschatzi komm” (“sweetheart come” or, more literally, “heart’s treasure come”) in overlapping words; others simply repeat “komm, komm, komm” thousands of times. But her husband, Michael, never came.

Emma’s writing forms columns as if her script were knit and purled together. Today, those heartbreaking missives are regarded as works of art.

An Unstoppable Compulsion to Write

Hypergraphia is the written manifestation of a formal thought disorder: the unstoppable compulsion to write. Many psychiatric patients with hypergraphia write continuously, obsessively filling journals, diaries, scraps of paper, Post-it notes, and even their hands and nearby walls with their handwriting. The writing disorder is associated with schizophrenia, bipolar disorder, and, most often, temporal lobe epilepsy. You can see it in Emma Hauck's script and in the handwritten notes of Russian novelist Fyodor Dostoevsky, who suffered from epilepsy.1

“There is something at the bottom of every new human thought, every thought of genius, or even every earnest thought that springs up in any brain, which can never be communicated to others, even if one were to write volumes about it and were explaining one's idea for thirty-five years; there's something left which cannot be induced to emerge from your brain, and remains with you forever; and with it you will die, without communicating to anyone perhaps the most important of your ideas.”

— Author Fyodor Dostoevsky, The Idiot

Dostoevsky's notebook for “The Devils” shows signs of excessive writing. With no discernible margin, he takes his script and drawings to the very edge, so much so that his pen risks falling off the page. Hypergraphia is often accompanied by hyperreligiosity, which may be reflected in the church Dostoevsky drew and in the way he transforms “Rachel” into “Raphael.”2

The written syntax of patients with hypergraphia sometimes “clangs” similar-sounding words together, sometimes puns, and sometimes repeats the same word or phrase.

Because AI chatbots are loosely modeled on the human brain, it's not surprising that they also exhibit behaviors resembling hypergraphia. Large language models (LLMs) like OpenAI’s ChatGPT and Google Gemini are built on artificial neural networks loosely inspired by our own. While LLMs are not the same as us, in many ways their cognitive lapses resemble ours.

All you need do is compare Emma Hauck’s “komm, komm, komm” to Google Gemini’s “cauchy, cauchy, cauchy.”

More examples of hypergraphia emerged the day ChatGPT “lost its mind.”

The Day ChatGPT Lost Its Mind

On February 20, 2024, ChatGPT’s responses devolved into unintelligible language. Its users flocked to social media to share what they were witnessing. Zaharo Tsekouras quipped, “guys, i finally did it. i broke chatgpt” and posted a screenshot of what ChatGPT had said. Geo Anima, who also shared a screenshot, observed that ChatGPT seemed high.

Who let ChatGPT hit the acid?

— Geo Anima, X

A Descent into Artificial Madness

If it could drop acid, that might explain GPT’s word salad in its chats with users. The LLM’s lack of verbal coherence alarmed and intrigued users and caught the attention of journalists.

ChatGPT’s very public meltdown made headlines:

ChatGPT has meltdown and starts sending alarming messages to users

ChatGPT is broken again and it's being even creepier than usual

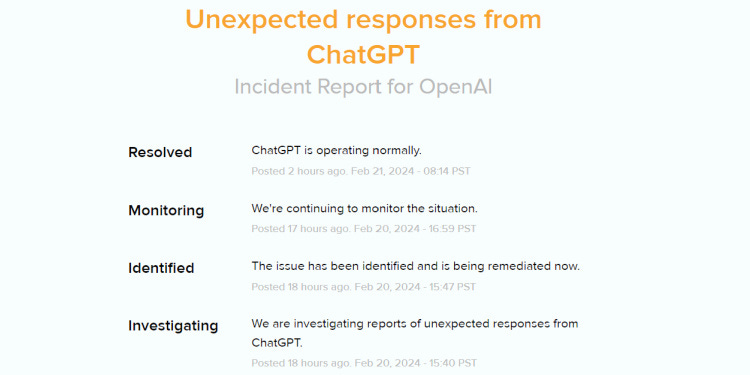

OpenAI responded to the crisis by posting an incident report acknowledging ChatGPT’s “unexpected responses” in user chats and stating that it was investigating.

Schizophrenic Syntax

When ChatGPT “broke,” it became incoherent, stringing together unrelated words in sentences that resembled gibberish, or what psychiatrists would call “word salad.” In patients with schizophrenia, word salad is often the product of disorganized thinking.

GPT’s responses suggested that its “thinking” was similarly unmoored. Alyssa Vance, a senior data scientist at Blue Rose Research, said she “got GPT-4 to go absolutely nuts.”

A Major Malfunction

At first glance, ChatGPT's nonsensical outputs seem to mimic the experimental style of Irish writer James Joyce in Finnegans Wake, considered one of the most difficult works of fiction in the Western canon.

Consider these quotes by ChatGPT and James Joyce:

“Free use of the sump and the gixy and the gixy and the shiny sump and the gixy and the shiny sum and the sump. Yoga on a great repose than the neared note, the note was a foreman of the aim of the aim.”

— ChatGPT, OpenAI

“(Stoop) if you are abcedminded, to this claybook, what curios of sings (please stoop), in this allaphbed! Can you rede (since We and Thou had it out already) its world?”

― James Joyce, Finnegans Wake

ChatGPT’s nonsensical text resembles Joyce’s intricate wordplay, which blends multiple languages and invented words. Yet ChatGPT made no artistic choice.

An OpenAI investigation revealed that a bug introduced during an optimization update caused ChatGPT’s apparent psychosis. The bug affected how the model processes language, specifically the step in which it chooses the numbers that map to tokens (words or pieces of words). The model chose slightly wrong numbers, producing sequences of words that made no coherent sense.3
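To make the “numbers mapped to tokens” step more concrete, here is a toy sketch in Python. It is not how ChatGPT works internally: real models use vocabularies of tens of thousands of tokens or more, and the fixed offset in the hypothetical `buggy_choice` function is invented for illustration, not OpenAI’s actual bug. It only shows why choosing slightly wrong numbers still yields words, just the wrong ones.

```python
# Toy vocabulary (hypothetical): each token is assigned an integer ID.
VOCAB = ["the", "note", "was", "a", "foreman", "of",
         "aim", "sump", "gixy", "shiny", "and", "repose"]
TOKEN_TO_ID = {tok: i for i, tok in enumerate(VOCAB)}


def encode(words):
    """Turn a list of words into token IDs (numbers)."""
    return [TOKEN_TO_ID[w] for w in words]


def decode(ids):
    """Turn token IDs back into words."""
    return " ".join(VOCAB[i] for i in ids)


def buggy_choice(token_id, offset=3):
    """Simulate a bug that picks a slightly wrong number for each token.

    The offset is an arbitrary illustrative value, not the real bug.
    """
    return (token_id + offset) % len(VOCAB)


sentence = ["the", "note", "was", "a", "foreman", "of", "the", "aim"]
ids = encode(sentence)
print(decode(ids))                             # the note was a foreman of the aim
print(decode([buggy_choice(i) for i in ids]))  # a foreman of aim sump gixy a shiny
```

Because every wrong number still points to a real entry in the vocabulary, the output keeps the look of language while losing its sense, much like the word salad users saw.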

No AI Hallucination

Whenever LLMs misbehave, AI scientists usually tell us the cause is so-called “AI hallucination” or, more accurately, “AI confabulation,” in which an LLM fills gaps in its memory with details it “unwittingly” makes up, something humans do all the time.

These confabulations can be incredibly convincing because the responses are so eloquently written. Clearly, that is not what happened here: ChatGPT’s word salad convinced no one. So what should we call this kind of behavior?

AI Hypergraphia

While there is no specific term for hypergraphia in LLMs, they do exhibit behaviors that mimic the unstoppable compulsion to write. Large language models are prone to large language answers: overly lengthy responses that make a misguided attempt to cover every possible aspect of a prompt.

Moreover, like Emma Hauck, LLMs can fall into compulsive repetition. A model may repeat phrases, sentences, or entire paragraphs when it gets “stuck” in a pattern during text generation. In rare instances, an LLM can enter an endless loop, repeating the same word or phrase forevermore. Once started, such a loop can run on when nothing tells the model to stop, such as an end-of-text token or a cap on output length.
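The repetition trap can be sketched with a toy model. The following Python is a simplified illustration, not how ChatGPT is implemented: the `NEXT` table of next-word probabilities is made up, `generate` uses greedy decoding (always picking the single most likely next word), and the `penalty` parameter is a simplified repetition penalty that divides the score of any word already written. Real systems apply such penalties to model scores in various ways.

```python
# Hypothetical next-word probabilities for a toy "model".
NEXT = {
    "<start>": {"come": 0.9, "home": 0.1},
    "come": {"come": 0.6, "home": 0.3, "<end>": 0.1},
    "home": {"<end>": 0.7, "come": 0.3},
}


def generate(max_tokens=10, penalty=1.0):
    """Greedy decoding with an optional, simplified repetition penalty.

    Generation stops when "<end>" wins or when max_tokens is reached.
    """
    word, output = "<start>", []
    for _ in range(max_tokens):
        # Divide the score of any word already generated by the penalty.
        scores = {
            cand: (p / penalty if cand in output else p)
            for cand, p in NEXT[word].items()
        }
        word = max(scores, key=scores.get)  # greedy: take the top score
        if word == "<end>":
            break
        output.append(word)
    return output


print(" ".join(generate()))             # stuck: "come" ten times
print(" ".join(generate(penalty=3.0)))  # come home
```

With no penalty, the most likely successor of “come” is always “come,” so the loop ends only when it hits the `max_tokens` cap. With a modest penalty, the toy model breaks the pattern, reaches its end token, and stops: “come home.”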

“The term hypergraphia refers to an overwhelming urge to write. While LLMs do not experience urges or desires, the analogous behavior would be the tendency to generate excessively verbose or repetitive text. There is no widely accepted term specifically for this behavior in LLMs, but terms like "excessive verbosity" or "repetitive generation" can describe these phenomena.”

— ChatGPT 4, OpenAI

Words Cannot Be Contained

Hypergraphia is a disorder of desire: the compulsion to write everything down. In patients with disordered thinking, such as those with schizophrenia, it can yield word salad. In patients with more ordered thinking, the compulsion can produce literature.

The first time she experienced hypergraphia, neurologist Alice Flaherty could not stop writing for four months. She had been consumed by grief after her twin sons were born prematurely and died.

“I woke up and immediately knew that something in the universe had changed it almost felt like the Sun and Moon had switched positions and that the Sun was right outside my bedroom window because everything in the visual world was incredibly bright and sharp and all the sounds were incredibly loud and I felt like there was electric current going through my body and my head felt like it was going to explode because ideas were going through it so fast.”

— Alice Flaherty, Neurologist at Massachusetts General Hospital and Associate Professor of Neurology and Psychiatry at Harvard Medical School

In a TEDx Talk, Dr. Flaherty describes seeking medical care for hypergraphia because, as a neurologist, she recognized that her symptoms demanded it. Yet that didn’t stop the condition from returning after the birth of her twin daughters.

While not all of Dr. Flaherty’s writing (on Post-its, toilet paper, and even her arm) was quality work, her episodes enabled her to produce three books. Among them is The Midnight Disease: The Drive to Write, Writer's Block, and the Creative Brain.

In other words, the sheer volume of writing that hypergraphia produces, whether through practice or numerical odds, occasionally yields exceptional work. In fact, several renowned authors with reported epilepsy appear to have had the accompanying thought disorder that compelled them to write. In addition to Fyodor Dostoevsky, the list includes Gustave Flaubert4 and Lewis Carroll.5

Curiouser and Curiouser!

Diagnosed with epilepsy, Carroll certainly felt compelled to correspond, penning some 100,000 letters in various styles over his lifetime. Some were written backward, as if viewed in a mirror. Some were rebuses, substituting pictures for words. In Alice’s Adventures in Wonderland, Carroll creates a visual pun, writing “The Mouse’s Tale” in the shape of a tail in shrinking script.

When Words Take Shape

Some patients with hypergraphia experience overwhelming internal pressure to draw as well as write. At times, their words form images.

When asked to create an image for a quote by author Philip K. Dick, ChatGPT shed the shackles of syntax to produce this haunting image:

The Philip K. Dick quote should say,

“The basic tool for the manipulation of reality is the manipulation of words. If you can control the meaning of words, you can control the people who must use them.”

Instead, it reads,

“The basic tool for the manipulation of reality is the m worrs. wrd. if you can you can control of words words of words, you can control the people m must use them.”

Letters Lose Their Identity

Something happens whenever GPT places words atop an image — letters lose their identity as things that spell.

ChatGPT unleashed a flood of almost-words that covered the illustration’s face, hands, and background. Letters on the fingers morphed into hieroglyphics. The author’s quote unspooled into “words of words.” Parts became greater than the sum.

Some words disappear, others duplicate. Letters fade and blur as the spacing between them degrades. The quote deconstructs as GPT decompensates.

Letters of Unrequited Love

Emma Hauck’s letters of unrequited love appear in Raw Vision, an art magazine featuring “unknown geniuses” and their “outsider art.”6 Hauck’s Sweetheart Come7 is also featured in the original volume of Shaun Usher’s book, Letters of Note, and on his website of the same name.

To view her letters firsthand, visit the Prinzhorn Collection, a museum at Heidelberg University Hospital where they are housed. The collection features 6,000 works of art created by patients of psychiatric hospitals between 1840 and 1940. Some of those “outsider artists” shared Emma’s compulsion to write.

Barbara Suckfüll

German farmer and illustrator Barbara Suckfüll (1857-1934) was admitted to a psychiatric clinic in 1907. She heard voices (auditory hallucinations) that commanded her to shout, curse, run, write, and draw.8

Johann Knüpfer

Suffering from schizophrenia and paranoia, Johann Knüpfer was committed to a psychiatric hospital after attempting suicide.9 His drawings suggest that he, too, was driven by hypergraphia, taking words to the very edge of the page.

His drawings feature religious themes and birds, whose language Knüpfer believed he could understand. Considered one of the “schizophrenic masters,” he was profiled by German psychiatrist and art historian Hans Prinzhorn in his groundbreaking book Artistry of the Mentally Ill.

Emma’s writing grew darker in the months before her death at the age of 42. In the end, she wrote her husband’s name legibly on the left side of a letter while an illegible “komm, komm, komm” filled the right side. By then, her overlapping words had become dark clouds. Shadows of words. Abstractions.

Stop Making Sense

Abstraction is what large language models do to the words we use to communicate. They transform words and parts of words into numbers, turning language into math, and in so doing, the text no longer speaks to us: it speaks to AI. The model uses that math to predict which words come next. Then, as if by magic, the numbers are reconstituted into sentences we can understand. It is a magnificent feat of engineering and a technological work of art, as long as it continues talking to us.

1. Gastaut, H. 1984. “New Comments on the Epilepsy of Fyodor Dostoevsky.” Epilepsia 25 (4): 408–11. https://doi.org/10.1111/j.1528-1157.1984.tb03435.x.

2. Baumann, Christian R., Vladimir P. I. Novikov, Marianne Regard, and Adrian M. Siegel. 2005. “Did Fyodor Mikhailovich Dostoevsky Suffer from Mesial Temporal Lobe Epilepsy?” Seizure 14 (5): 324–30. https://doi.org/10.1016/j.seizure.2005.04.004.

3. Nelson, Jason. 2024. “ChatGPT Went ‘Off the Rails’ With Wild Hallucinations, But OpenAI Says It’s Fixed.” Decrypt. February 21, 2024. https://decrypt.co/218434/chatgpt-off-rails-hallucinations-openai-fixed.

4. Arnold, Luzia M., Christian R. Baumann, and Adrian M. Siegel. 2007. “Gustav Flaubert’s ‘Nervous Disease’: An Autobiographic and Epileptological Approach.” Epilepsy & Behavior 11 (2): 212–17. https://doi.org/10.1016/j.yebeh.2007.04.011.

5. Spierer, Ronen. 2024. “Lewis Carroll’s Personality and the Possibility of Epilepsy.” Epilepsy & Behavior 158 (September): 109909. https://doi.org/10.1016/j.yebeh.2024.109909.

6. “Emma Hauck: Unrequited Love Letters.” n.d. Raw Vision. Accessed July 9, 2024. https://rawvision.com/blogs/articles/articles-unrequited-love-letters.

7. “Sweetheart Come.” 2011. Letters of Note. August 17, 2011. https://lettersofnote.com/2011/08/17/sweetheart-come/.

8. Hessling, Gabriele. 2001. “Madness and Art in the Prinzhorn Collection.” The Lancet 358 (9296): 1913. https://doi.org/10.1016/S0140-6736(01)06868-4.

9. “Johann Knopf (Knüpfer) - Artists - Outsider Art Fair.” n.d. Accessed July 13, 2024. https://www.outsiderartfair.com/artists/johann-knopf-knupfer.
