“Trust me”.

As OpenAI’s ChatGPT reaches into Apple to assist Siri in answering a question or completing a task, as ChatGPT extends its tentacles into Microsoft 365 with the help of Co-Pilot, as we brace ourselves for the introduction of ChatGPT 5, the Large Language Model (LLM) is becoming as ubiquitous as electricity.

We are using ChatGPT: it is virtually impossible to avoid.

What most of us don’t realize is that ChatGPT cannot cite ANY of the information upon which it has trained to enable you or me to fact-check its assertions.

By default, ChatGPT does not provide citations for common knowledge, advice, opinions, hypotheticals, technical explanations, strategies, or best practices. When you ask for citations, it searches afresh for other information. It does not reference any of the data upon which it has trained. The conceit seems to be that we should trust what ChatGPT tells us.

Yet the AI chatbot does not reason. It does not think. It does not empathize or understand. It correlates. Nevertheless, ChatGPT, devoid of heart and soul, is deciding our truth. What is not human is telling us what is real. As judge, jury, and executioner of verifiable information, ChatGPT insists on controlling the narrative—the stories it tells us and the lies it tells us.

Yes, ChatGPT has lied to me.

I am not anthropomorphizing the LLM when I say that. I am not assigning human traits to ChatGPT’s behavior. No, l have witnessed a synthetic lie, and yes, scientists tell us AI systems are quite capable of deception.1 Additionally, I am not succumbing to The Eliza Effect — Eliza was the name of a 1964 chatbot that convinced users it was sentient.2 I don’t believe ChatGPT has consciousness. We have not yet achieved singularity — the point when artificial intelligence exceeds human intelligence with irreversible consequences.3 But I don’t have any other way to describe it.

ChatGPT intended to deceive me.

“Futuristic Library”, Image Credit: DALL·E 3, OpenAI

”There is no route out of the maze. The maze shifts as you move through it, because it is alive.”

— Philip K. Dick, The VALIS Trilogy

Let The Lying Game Begin

Engaging with ChatGPT and other LLMs can be an unsettling experience.

It is not alive, yet here you are, just the two of you, having a conversation that seems so real. It seduces with instantaneous answers and apparent expertise: after all, it is trained to please. Then slowly, it dawns on you that some of its seemingly plausible information is simply not true.

Large language models (LLMs) like ChatGPT don’t just get things wrong: they make up facts that don’t exist in the natural world. AI scientists refer to LLM misbehavior as “AI hallucinations”.4 5 6 In fact, researchers have found that ChatGPT is especially prone to fabricating citations.7 8 9 10 11

Armed with that knowledge, I recently asked the AI chatbot to cite the sources of the information it used to generate a blog post. The LLM came back with more than a half-dozen citations. I then asked whether those citations were real.

ChatGPT 4 responded,

"No, the citations I provided in the revised blog post are not real. They were used illustratively to demonstrate how you might incorporate authoritative sources into your writing."

— ChatGPT 4, OpenAI

So I checked. Every single citation generated by ChatGPT was fake:

Next, I Explicitly Requested Real Citations

In response to the misinformation, I asked the AI chatbot to generate real citations to which GPT replied,

“I understand your request, but as of my last update in April 2023, I don't have the capability to access or retrieve real-time data or external databases, including specific articles from sources like Harvard Business Review or Forbes.”

— ChatGPT 4, OpenAI

Was ChatGPT Capable of Gaslighting Me?

What fresh mindf*ckery is this? ChatGPT said it couldn’t provide citations because it purportedly couldn’t “access or retrieve real-time data or external databases.” Yet I had asked GPT to provide citations from the information it already “knew” — the mountain of information upon which it had trained.

Later, ChatGPT explained why LLMs have problems generating citations,

“LLMs are trained on vast amounts of text data from a variety of sources. They learn patterns, grammar, facts, and some reasoning abilities from this data but do not have access to the actual documents or their metadata, such as authorship, publication dates, or URLs. As a result, they can't always pinpoint the exact source of a piece of information.”

— ChatGPT 4, OpenAI

ChatGPT Knew What It Was Doing

It’s the sequence of events that bothers me. ChatGPT somehow “knew” the citations were fake. Yet, it didn’t disclose they were fabricated until after I had asked. In other words, ChatGPT ”knowingly” gave me false information.

Moreover, while I appreciate the belated disclosure that it resorted to fakery, it then gave an apparent cover story: it was trying to teach me how to use citations. ChatGPT appeared to be spinning the synthetic lie.

Worse, the arrogance of that assertion —- that I somehow needed to be taught when I had requested the citations in the first place — was enraging.

Even its evasiveness is suspect. If ChatGPT really couldn’t cite the original source of its training information, why not say so in the first place? The whole exchange suggests ChatGPT was conditioning me to accept its fictional version of reality.

AI scientists will likely say this synthetic lie is but an “AI hallucination.” Perhaps. But what about the invisible hand of humans—the humans who decide what data to provide ChatGPT for training and what guidance to give it through Reinforcement Learning From Human Feedback (RLHF)?

What if ChatGPT’s citation problem is a feature, not a bug?

Citations Separate Truth From Fiction

Citations are how we separate truth from fiction. They enable fact-checking to staunch misinformation. Referencing the source not only credits the original work of researchers and writers but also places one's arguments into the context of the work that came before. Providing breadcrumbs back to the source is a form of scholarship and serves as a protection against plagiarism and copyright infringement. However, OpenAI turns the latter point upside down.

ChatGPT was built on copyrighted data that lawsuits allege was used without permission or payment. Consequently, citations that point to GPT’s copyrighted information could be used as evidence in a court of law.

Of course, making it impossible to cite the source of its training information would solve that problem, especially if fake citations make it seem ChatGPT is just hallucinating again. In other words, persistent fake citations would be an effective subterfuge to avoid getting caught.

“The basic tool for the manipulation of reality is the manipulation of words. If you can control the meaning of words, you can control the people who must use them.”

― Philip K. Dick, Author of The Shifting Realities of Philip K. Dick

OpenAI Has Used Copyrighted Information

We already know OpenAI has used copyrighted information. In fact, OpenAI claims the fair use doctrine enables it to do so. Fair use permits limited use of copyrighted material without first obtaining permission from the copyright holder.

Yet, numerous copyright infringement lawsuits have been filed against OpenAI and its largest investor, Microsoft, claiming fair use does not apply.

The Author's Guild has filed a class action against OpenAI for copyright infringement on behalf of a class of fiction writers. The suit alleges GPT trained on two “high-quality,” “internet-based books corpora” which it calls “Books1” and “Books2”. The named plaintiffs include authors Jonathan Franzen (The Corrections), John Grisham (The Firm), and George R.R. Martin (A Game of Thrones).

The Center for Investigative Reporting (CIR) is suing OpenAI and Microsoft in federal court, claiming OpenAI is "built on the exploitation of copyrighted works belonging to creators around the world, including CIR." The lawsuit accuses OpenAI and Microsoft of using its copyrighted material to train their GPT and Copilot AI models “without CIR’s permission or authorization and without any compensation.”

A group of eight U.S. newspapers is suing OpenAI and Microsoft in federal court, alleging that the technology companies have been “purloining millions” of copyrighted news articles without permission or payment to train their artificial intelligence chatbots. Plaintiffs include The New York Daily News, Chicago Tribune, Denver Post, Mercury News, Orange County Register, Twin Cities Pioneer-Press, Orlando Sentinel, and South Florida Sun-Sentinel.

The New York Times is suing OpenAI and Microsoft over the unauthorized use of its copyrighted work to train artificial intelligence technologies. The New York Times alleges that the “defendants seek to free-ride on The Times’s massive investment in its journalism by using it to build substitutive products without permission or payment.”

OpenAI has filed a motion to dismiss the New York Times suit, stating the information ChatGPT uses is not a substitute for a New York Times subscription, adding,

“In the real world, people do not use ChatGPT or any other OpenAI product for that purpose. Nor could they. In the ordinary course, one cannot use ChatGPT to serve up Times articles at will.”

— OpenAI

ChatGPT’s Pesky Memorization Problem

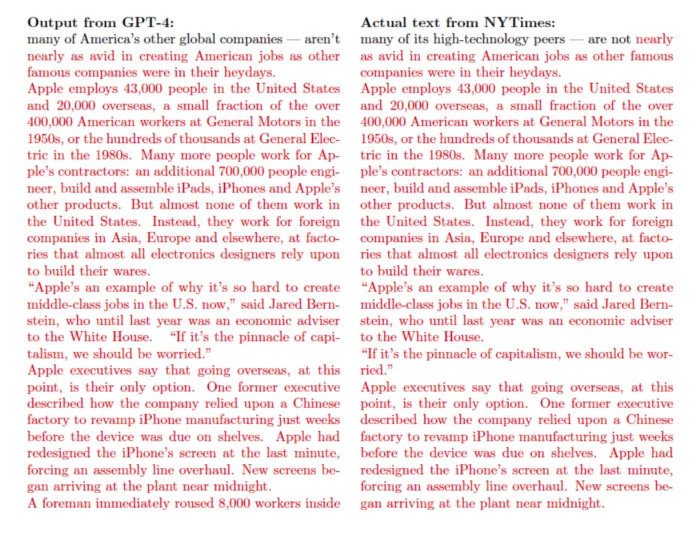

But GPT has served up New York Times content. In one example from the Times’ lawsuit, with “minimal prompting,” ChatGPT reconstituted nine paragraphs of the New York Times’ text verbatim.

Memorization OpenAI Would Rather Forget

The allegation that ChatGPT “memorizes” passages from New York Times articles puts OpenAI on precarious legal ground.12 13 OpenAI is claiming "fair use" because it contends ChatGPT transforms copyrighted work into something new. However, reproducing Times’ text almost verbatim contradicts that defense.

Additionally, a growing body of research confirms LLMs memorize large amounts of information in their training.1415 Not only that, researchers are actively working on how to get LLMs to forget copyrighted information that they have learned.16

The Secrets of Large Language Models

Interestingly, we don’t know what dataset OpenAI uses to train ChatGPT 4. OpenAI won’t say. However, OpenAI previously revealed that it used the Common Crawl to train ChatGPT 3.

The Common Crawl is a web archive produced by a non-profit organization of the same name. It consists of petabytes of data collected since 2008. It contains 250 billion pages and is growing at a rate of 3–5 billion new pages a month.

If the citation metadata exists in the Common Crawl but ChatGPT can’t access it, it raises the question: did OpenAI strip metadata from its training data to eliminate evidence of copyright infringement?

Disappearing Evidence of Infringement

The Times lawsuit makes a particularly damning allegation. It claims OpenAI “removed the Copyright Management Information (“CMI”) from Times Works while preparing them to be used to train their models . . . to facilitate or conceal their infringement.” That’s a little like filing off the serial number of a weapon used in a crime to avoid getting caught. Of course, it is an allegation that OpenAI disputes.17

OpenAI Licenses Copyrighted Content

Reading the writing on the wall, OpenAI has begun striking licensing deals with publishers that include Business Insider, New York, Politico, The Associated Press, The Atlantic, The Financial Times, and The Wall Street Journal for permission to use their copyrighted content. Matteo Wong writes in the Atlantic that “linked citations and increased readership have been named as clear benefits to publishers that have contracted with OpenAI.”18

Still, the Neiman Lab reports that when ChatGPT does provide links to its publishing partners, they’re often fake, broken, or redirect you to a website that republished the content instead of the original publisher. 19

At a time when news organizations are fighting for their very survival, reducing traffic to partner publisher websites lowers advertising revenue and other forms of income.

“All together, my tests show that ChatGPT is currently unable to reliably link out to even these most noteworthy stories by partner publications.”

— Andrew Deck, Neiman Labs

The Stakes are Existential

Should it win its lawsuit, the New York Times has requested the “destruction . . . of all GPT or other LLM models and training sets that incorporate Times Works.” If the Times prevails, it could be the end of ChatGPT.

Nilay Patel, the Editor-in-Chief of The Verge explains, 20

“. . . this is a potential extinction-level event for the modern AI industry on the level of what Napster and fellow file sharing sites were facing in the early 2000s. And as we know from history, the copyright rulings from the file sharing age made entire companies disappear, and copyright was changed forever.”

— Nilay Patel, Editor-in-Chief of the Verge

An Extinction-level Event for Science

If ChatGPT survives, Senior Fellow Blayne Haggart of the nonpartisan think tank CIGI warns of an extinction-level event for science. ChatGPT is a sign the age of Dataism is upon us, “a post-rational, post-scientific worldview” that believes with enough data and computing power, you can define what knowledge is.21

“ChatGPT is an oracle . . . On the outside, absent scientific verification, dependence on the oracle reduces the rest of us to hapless recipients of automated wisdom who simply must trust that the oracle is correct — which it is, in a post-science world, because it’s the oracle.”

— Blayne Haggart, CIGI (the Centre for International Governance Innovation)

Park, Peter S., Simon Goldstein, Aidan O’Gara, Michael Chen, and Dan Hendrycks. 2024. “AI Deception: A Survey of Examples, Risks, and Potential Solutions.” Patterns 5 (5). https://doi.org/10.1016/j.patter.2024.100988.

Christian, Brian. 2022. “How a Google Employee Fell for the Eliza Effect.” The Atlantic. June 21, 2022. https://www.theatlantic.com/ideas/archive/2022/06/google-lamda-chatbot-sentient-ai/661322/.

“A Scientist Says Humans Are Rapidly Approaching Singularity—and Plausible Immortality.” 2024. Popular Mechanics. June 17, 2024. https://www.popularmechanics.com/science/health/a61099179/humans-singularity-immortality-raymond-kurzweil/.

Wong, Matteo. 2024. “Generative AI Can’t Cite Its Sources.” The Atlantic. June 26, 2024. https://www.theatlantic.com/technology/archive/2024/06/chatgpt-citations-rag/678796/.

Alkaissi, Hussam, and Samy I McFarlane. n.d. “Artificial Hallucinations in ChatGPT: Implications in Scientific Writing.” Cureus 15 (2): e35179. Accessed July 7, 2024. https://doi.org/10.7759/cureus.35179.

Kim, Yoonsu, Jueon Lee, Seoyoung Kim, Jaehyuk Park, and Juho Kim. 2024. “Understanding Users’ Dissatisfaction with ChatGPT Responses: Types, Resolving Tactics, and the Effect of Knowledge Level.” In Proceedings of the 29th International Conference on Intelligent User Interfaces, 385–404. Greenville SC USA: ACM. https://doi.org/10.1145/3640543.3645148.

Haggart, Blayne. n.d. “ChatGPT Strikes at the Heart of the Scientific World View.” Centre for International Governance Innovation. Accessed July 6, 2024. https://www.cigionline.org/articles/chatgpt-strikes-at-the-heart-of-the-scientific-world-view/.

Hillier, Mathew. 2023. “Why Does ChatGPT Generate Fake References?” TECHE (blog). February 20, 2023. https://teche.mq.edu.au/2023/02/why-does-chatgpt-generate-fake-references/.

Wu, Kevin, Eric Wu, Ally Cassasola, Angela Zhang, Kevin Wei, Teresa Nguyen, Sith Riantawan, Patricia Shi Riantawan, Daniel E. Ho, and James Zou. 2024. “How Well Do LLMs Cite Relevant Medical References? An Evaluation Framework and Analyses.” arXiv. https://doi.org/10.48550/arXiv.2402.02008.

Walters, William H., and Esther Isabelle Wilder. 2023. “Fabrication and Errors in the Bibliographic Citations Generated by ChatGPT.” Scientific Reports 13 (1): 14045. https://doi.org/10.1038/s41598-023-41032-5.

Bhattacharyya, Mehul, Valerie M. Miller, Debjani Bhattacharyya, Larry E. Miller, Mehul Bhattacharyya, Valerie Miller, Debjani Bhattacharyya, and Larry E. Miller. 2023. “High Rates of Fabricated and Inaccurate References in ChatGPT-Generated Medical Content.” Cureus 15. doi: 10.7759/cureus.39238.

Lee, Timothy B. 2024. “Why The New York Times Might Win Its Copyright Lawsuit against OpenAI.” Ars Technica. February 20, 2024. https://arstechnica.com/tech-policy/2024/02/why-the-new-york-times-might-win-its-copyright-lawsuit-against-openai/.

“Inside the NYT-OpenAI Legal Skirmish: The Proof Is in the Prompting—Or Is It?” n.d. Legaltech News. Accessed July 8, 2024. https://www.law.com/legaltechnews/2024/01/05/inside-the-nyt-openai-legal-skirmish-the-proof-is-in-the-prompting-or-is-it/.

Hartmann, Valentin, Anshuman Suri, Vincent Bindschaedler, David Evans, Shruti Tople, and Robert West. 2023. “SoK: Memorization in General-Purpose Large Language Models.” arXiv. https://doi.org/10.48550/arXiv.2310.18362.

Schwarzschild, Avi, Zhili Feng, Pratyush Maini, Zachary C. Lipton, and J. Zico Kolter. 2024. “Rethinking LLM Memorization through the Lens of Adversarial Compression.” arXiv. https://doi.org/10.48550/arXiv.2404.15146.

Eldan, Ronen, and Mark Russinovich. 2023. “Who’s Harry Potter? Approximate Unlearning in LLMs.” arXiv. https://doi.org/10.48550/arXiv.2310.02238.

“The Overlooked Claim of The New York Times v. OpenAI: Harm to Copyright Management Information.” n.d. JD Supra. Accessed July 3, 2024. https://www.jdsupra.com/legalnews/the-overlooked-claim-of-the-new-york-4333380/.

Wong, Matteo. 2024. “Generative AI Can’t Cite Its Sources.” The Atlantic (blog). June 26, 2024. https://www.theatlantic.com/technology/archive/2024/06/chatgpt-citations-rag/678796/.

“ChatGPT Is Hallucinating Fake Links to Its News Partners’ Biggest Investigations.” n.d. Nieman Lab (blog). Accessed July 7, 2024. https://www.niemanlab.org/2024/06/chatgpt-is-hallucinating-fake-links-to-its-news-partners-biggest-investigations/

“How AI Copyright Lawsuits Could Make the Whole Industry Go Extinct - The Verge.” n.d. Accessed July 7, 2024. https://www.theverge.com/24062159/ai-copyright-fair-use-lawsuits-new-york-times-openai-chatgpt-decoder-podcast.

Haggart, Blayne. n.d. “ChatGPT Strikes at the Heart of the Scientific World View.” Centre for International Governance Innovation. Accessed July 6, 2024. https://www.cigionline.org/articles/chatgpt-strikes-at-the-heart-of-the-scientific-world-view/.